Abstract

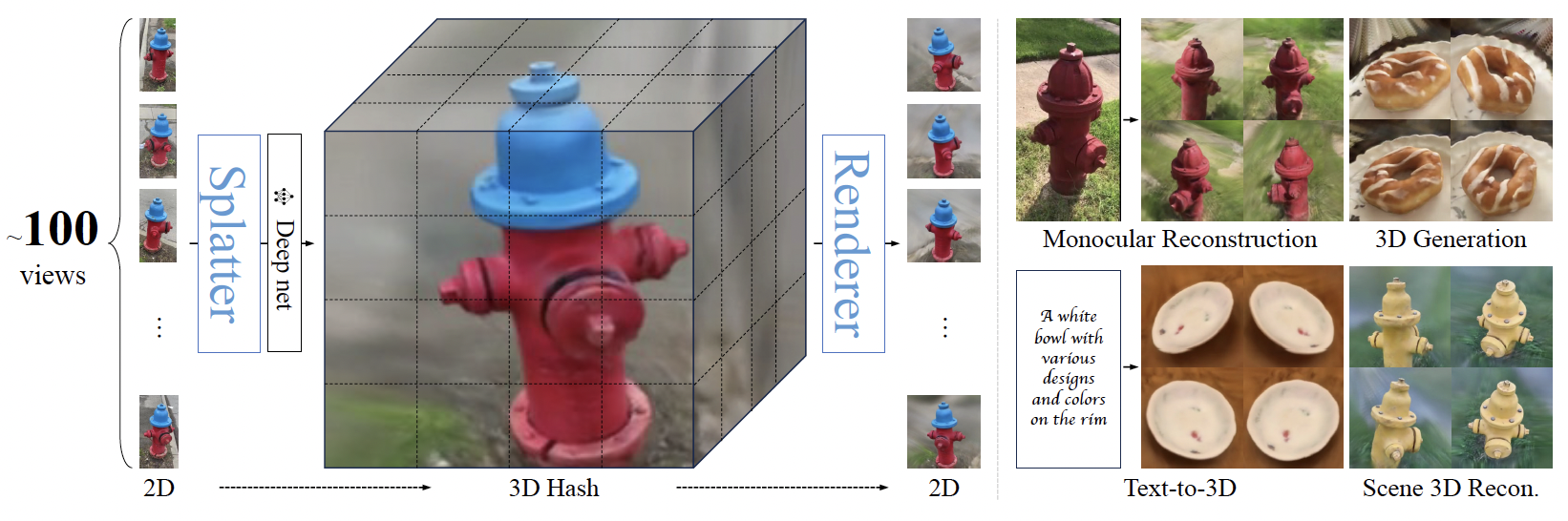

Lightplane Renderer and Splatter are a pair of highly scalable components for neural 3D fields that significantly reduce memory usage in 2D-3D mapping. Lightplane Renderer renders images from neural 3D fields, and Lightplane Splatter unprojects input images into 3D hash structures. Both components are differentiable.

Contemporary 3D research, particularly in reconstruction and generation, relies heavily on 2D images for inputs or supervision. However, current designs for these 2D-3D mappings are memory-intensive, posing a significant bottleneck for existing methods and hindering new applications. In response, we propose a pair of highly scalable components for 3D neural fields: Lightplane Renderer and Splatter, which significantly reduce memory usage in 2D-3D mapping. These innovations enable the processing of vastly more and higher-resolution images at small memory and computational cost. We demonstrate their utility in various applications, from benefiting single-scene optimization with image-level losses to realizing a versatile pipeline for dramatically scaling 3D reconstruction and generation.

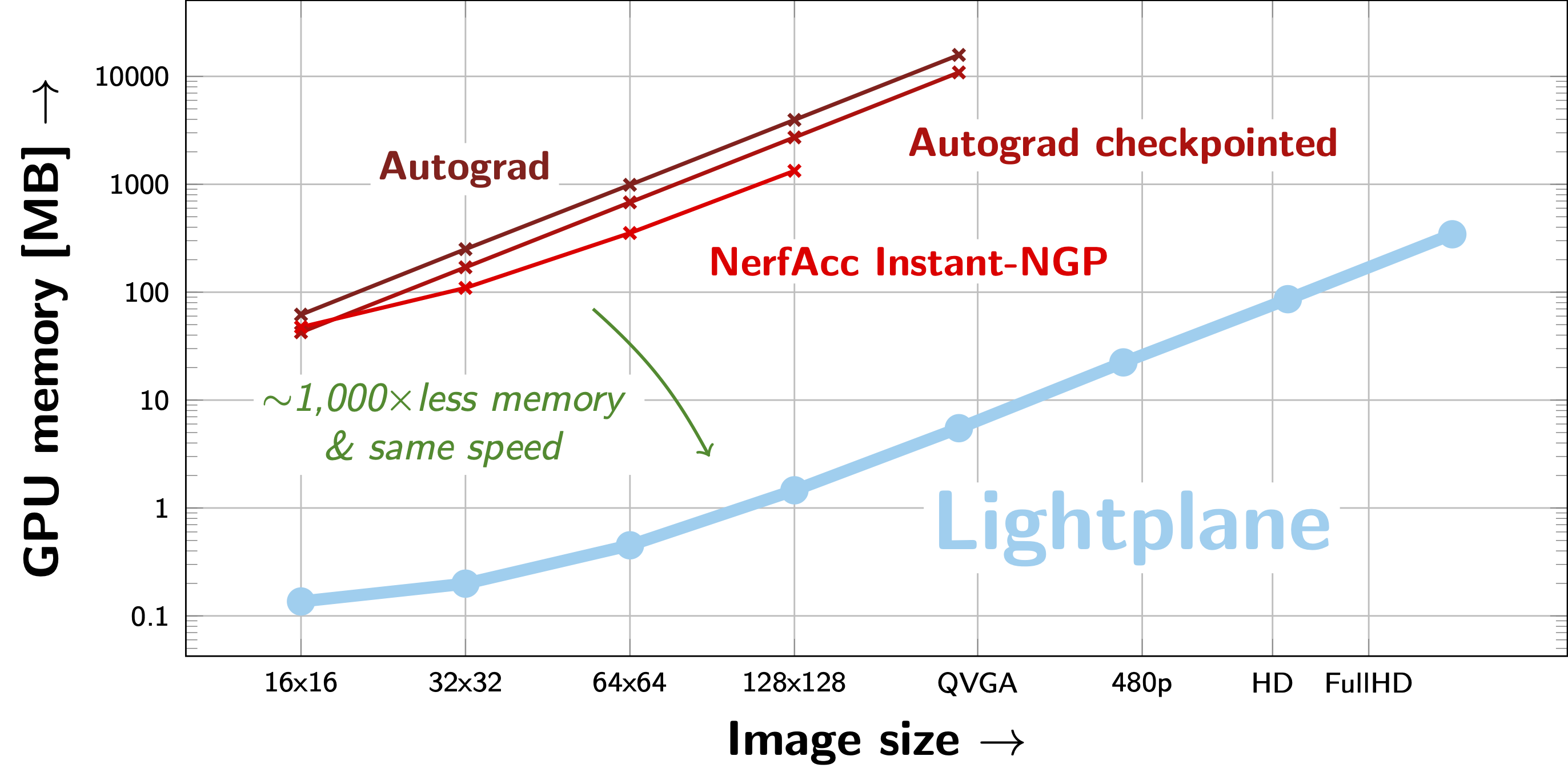

Memory-Efficient Components

Both Lightplane Renderer and Splatter are designed to be highly memory-efficient, saving 3 to 4 orders of magnitude of memory at comparable speed. Taking rendering as an example, Lightplane Renderer can differentiably render batches of FullHD (1920 x 1080) images while consuming less than a gigabyte of GPU memory. All plot axes are log-scaled.
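To give a sense of scale, here is a back-of-envelope estimate of what a naive differentiable renderer might need to store for one FullHD image if it keeps a feature for every sampled 3D point for the backward pass. Only the image size comes from the text above; the samples per ray, feature width, and float32 precision are illustrative assumptions, not Lightplane's settings or measurements.

```python
# Back-of-envelope estimate. Every constant except the image size is an assumption.
WIDTH, HEIGHT = 1920, 1080   # FullHD, as stated in the text
SAMPLES_PER_RAY = 128        # assumed number of 3D points sampled along each ray
FEATURE_CHANNELS = 32        # assumed feature width per sampled point
BYTES_PER_VALUE = 4          # assumed float32 storage


def naive_activation_bytes(width, height, samples, channels, bytes_per_value):
    """Bytes needed to keep one per-point feature tensor for the backward pass."""
    rays = width * height  # one ray per pixel
    return rays * samples * channels * bytes_per_value


total = naive_activation_bytes(
    WIDTH, HEIGHT, SAMPLES_PER_RAY, FEATURE_CHANNELS, BYTES_PER_VALUE
)
gib = total / 2**30
print(f"{WIDTH * HEIGHT:,} rays -> {gib:.1f} GiB for a single stored tensor")
# prints "2,073,600 rays -> 31.6 GiB for a single stored tensor"
```

Under these assumptions a single intermediate tensor already exceeds the memory of most GPUs, which is why avoiding the storage of per-point intermediates matters for full-image rendering.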

Generalizable 3D Hash Structures & A Versatile 3D Framework

A key advantage of Lightplane is that it is designed to generalize to various 3D hash structures. We evaluate it on Voxel Grids and TriPlanes, but it can be easily extended to other structures such as Hash Tables and HexPlane (work in progress). It provides a general mechanism for exchanging information between 2D and 3D (i.e., rendering and lifting) across these 3D hash structures.

Combining Lightplane Renderer and Splatter, we establish a versatile framework for 3D reconstruction and generation.

Boosting Large 3D Reconstruction and Generation Models

The memory savings of the Lightplane components significantly boost the scalability of large 3D reconstruction and generation models by dramatically increasing the amount of input and output information they can handle. Additionally, the saved memory can be used to increase model capacity and batch size. Together, these lead to performance improvements in 3D reconstruction and generation.